Overview

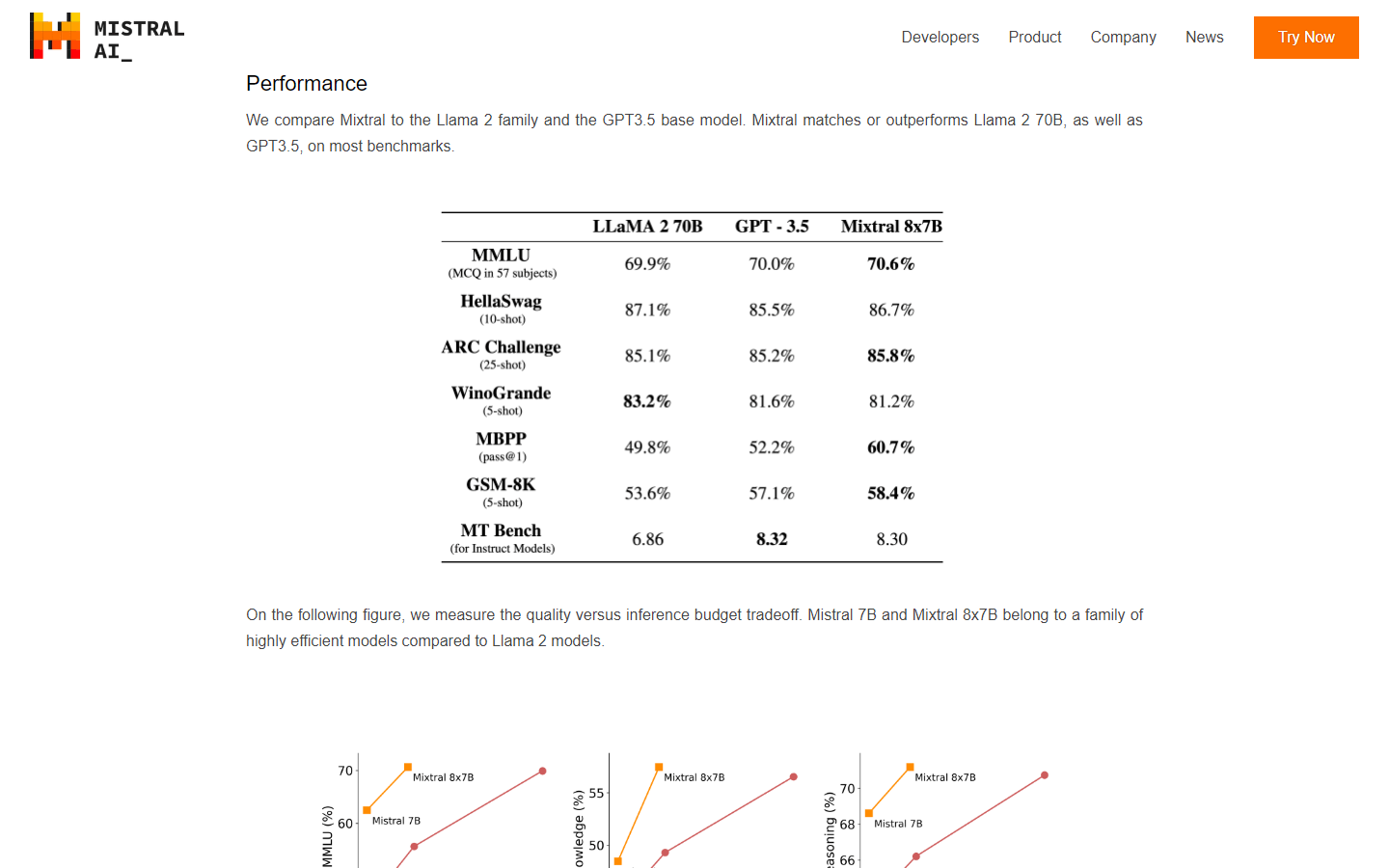

Mixtral 8x7B is a state-of-the-art sparse mixture-of-experts (SMoE) large language model developed by Mistral AI. It has 46.7 billion parameters in total but uses only about 12.9 billion of them per token, so it runs inference at roughly the speed and cost of a 12.9B-parameter model. Mixtral 8x7B matches or outperforms Llama 2 70B and GPT-3.5 on most standard benchmarks and supports a context length of 32k tokens. It also performs strongly in French, German, Spanish, and Italian as well as English. Mistral AI's analysis found no clear relationship between the experts a token is routed to and the domain of the text.
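To put the 32k-token context in perspective, the sketch below estimates the key/value cache that one sequence needs at full length. It assumes values from the model's Hugging Face `config.json` that this page does not state (32 layers, 8 key/value heads from grouped-query attention, head dimension 128) and a 16-bit cache; `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(seq_len: int,
                   num_layers: int = 32,      # assumed: num_hidden_layers
                   num_kv_heads: int = 8,     # assumed: num_key_value_heads (GQA)
                   head_dim: int = 128,       # assumed: hidden_size 4096 / 32 heads
                   bytes_per_value: int = 2,  # assumed: fp16/bf16 cache
                   ) -> int:
    """Estimate the KV-cache size of one sequence: keys and values for every layer."""
    # The leading factor 2 counts one tensor for keys and one for values.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


full_context = 32 * 1024  # 32k tokens
gib = kv_cache_bytes(full_context) / 2**30
print(f"KV cache at {full_context} tokens: {gib:.1f} GiB")  # prints 4.0 GiB
```

The cache grows linearly with sequence length and batch size, on top of the memory needed for the weights themselves.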

The model works with popular optimization tools such as Flash Attention 2, bitsandbytes, and PEFT. Mistral AI also provides an instruction-fine-tuned version, "mistralai/Mixtral-8x7B-Instruct-v0.1", for conversational applications. Mixtral 8x7B is released under the Apache 2.0 license, and its weights are available for download on the Hugging Face Hub.

Core Features

Sparse Mixture of Experts (SMoE): Each layer of Mixtral contains 8 feed-forward "expert" blocks, and a router selects 2 of them for every token. The model therefore holds far more parameters than it uses for any single token, which keeps inference compute low while preserving the capacity of a much larger model.
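A minimal sketch of top-2 routing as the Mixtral paper describes it: a router scores all 8 experts for a token, only the 2 best-scoring experts run, and their outputs are mixed with softmax weights computed over those two scores. The toy experts and router weights below are hypothetical stand-ins for the real feed-forward blocks, written in pure Python for readability rather than speed.

```python
import math
from typing import Callable, List

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(x: Vector, router: List[Vector], experts: List[Expert], k: int = 2) -> Vector:
    """Route one token vector x through the k highest-scoring experts."""
    # One router logit per expert: dot product of x with that expert's router row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # Indices of the k largest logits; every other expert is skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the chosen logits only, so the k gate weights sum to 1.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only k of the experts are ever evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Toy demo (hypothetical): 8 experts that scale their input and log each call.
calls: List[int] = []


def make_expert(i: int) -> Expert:
    def expert(v: Vector) -> Vector:
        calls.append(i)
        return [(i + 1) * vi for vi in v]
    return expert


experts = [make_expert(i) for i in range(8)]
router = [[0.1 * i, 0.0] for i in range(8)]  # expert 7 scores highest, then expert 6
y = top_k_moe([1.0, 0.0], router, experts)
print(sorted(calls))  # [6, 7]: only two of the eight experts ran
```

In the real model this selection happens independently for every token at every layer, which is why the total parameter count (all experts) is much larger than the number of parameters actually used per token.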

High Performance: Although it uses only about 12.9B active parameters per token, far fewer than Llama 2 70B, Mixtral 8x7B matches or outperforms Llama 2 70B and GPT-3.5 on most standard benchmarks.
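The parameter figures can be reproduced from the model's published configuration. The sketch below assumes the values in the Mixtral-8x7B `config.json` on the Hugging Face Hub (not stated on this page) and counts weights layer by layer, once with all 8 experts and once with the 2 experts each token actually uses. `count_params` is a hypothetical helper.

```python
# Assumed values from the Mixtral-8x7B config.json on the Hugging Face Hub.
HIDDEN = 4096        # hidden_size
FFN = 14336          # intermediate_size (per expert)
LAYERS = 32          # num_hidden_layers
HEADS = 32           # num_attention_heads
KV_HEADS = 8         # num_key_value_heads (grouped-query attention)
EXPERTS = 8          # num_local_experts
ACTIVE_EXPERTS = 2   # num_experts_per_tok
VOCAB = 32000        # vocab_size


def count_params(experts_used: int) -> int:
    head_dim = HIDDEN // HEADS
    # q and o projections are HIDDEN x HIDDEN; k and v are smaller because of GQA.
    attn = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * KV_HEADS * head_dim
    expert_ffn = 3 * HIDDEN * FFN   # gate, up and down projections of one expert
    router = HIDDEN * EXPERTS       # gating layer that scores the experts
    norms = 2 * HIDDEN              # two RMSNorm weight vectors per layer
    per_layer = attn + experts_used * expert_ffn + router + norms
    embeddings = 2 * VOCAB * HIDDEN  # input embeddings + separate output head
    return LAYERS * per_layer + embeddings + HIDDEN  # + final RMSNorm


total = count_params(EXPERTS)
active = count_params(ACTIVE_EXPERTS)
print(f"total:  {total / 1e9:.1f}B")   # 46.7B
print(f"active: {active / 1e9:.1f}B")  # 12.9B
print(f"active vs Llama 2 70B: {active / 70e9:.0%}")  # 18%
```

Only the expert feed-forward blocks are multiplied by the number of experts used; attention, router, norms, and embeddings are shared, which is why the active count is slightly more than a quarter of the total.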

Multilingual Support: Mixtral performs well not only in English but also in other major European languages such as French, German, Spanish, and Italian. According to Mistral AI, this comes from a substantially larger share of multilingual data during pretraining than was used for Mistral 7B.

Optimized Hardware Utilization: Mixtral can be run in mixed precision (for example bf16) and with quantized weights on modern hardware accelerators. Both techniques reduce memory usage and can improve latency and throughput.
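Mixtral routes each token to only 2 of its 8 experts, but all experts must stay in memory, so memory is driven by the total parameter count. A rough, weights-only estimate (ignoring the KV cache, activations, and the small per-block scaling metadata that quantization formats store) shows why 8-bit and 4-bit quantization matter here; `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Weights only; ignores KV cache, activations and quantization metadata."""
    return num_params * bits_per_param / 8 / 1e9  # bits -> bytes -> GB (10^9)


MIXTRAL_PARAMS = 46.7e9  # total parameters: every expert must be resident

for label, bits in [("fp16/bf16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"{label:>9}: ~{weight_memory_gb(MIXTRAL_PARAMS, bits):.1f} GB")
```

Halving the bits per weight halves this figure, which is the main reason quantized Mixtral fits on far smaller GPU setups than the 16-bit model.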

Integration with Popular Optimization Tools: Mixtral works with widely used libraries such as Flash Attention 2 (faster attention kernels), bitsandbytes (8-bit and 4-bit quantization), and PEFT (parameter-efficient fine-tuning such as LoRA), which make the model cheaper to run and easier to adapt.
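A minimal sketch of how these three libraries can be combined through Hugging Face `transformers`: bitsandbytes loads the weights in 4-bit, Flash Attention 2 replaces the default attention kernel, and PEFT attaches a LoRA adapter for fine-tuning. The LoRA hyperparameters and the choice of target modules are illustrative assumptions, not recommended values. The function needs the `transformers`, `accelerate`, `bitsandbytes`, `flash-attn`, and `peft` packages plus enough GPU memory; imports sit inside the function so that merely defining it does not require them.

```python
def load_mixtral_for_lora(model_id: str = "mistralai/Mixtral-8x7B-v0.1"):
    """Sketch: 4-bit bitsandbytes weights + Flash Attention 2 + a LoRA adapter.

    Downloads the full checkpoint on first call and needs CUDA GPUs.
    """
    import torch
    from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    quant = BitsAndBytesConfig(
        load_in_4bit=True,                      # bitsandbytes 4-bit weights
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=torch.bfloat16,  # matmuls run in bf16
    )
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=quant,
        attn_implementation="flash_attention_2",  # requires the flash-attn package
        torch_dtype=torch.bfloat16,
        device_map="auto",                        # spread layers over available GPUs
    )
    model = prepare_model_for_kbit_training(model)  # standard prep for 4-bit training
    lora = LoraConfig(
        r=16,              # illustrative hyperparameters, not tuned values
        lora_alpha=32,
        lora_dropout=0.05,
        target_modules=["q_proj", "k_proj", "v_proj", "o_proj"],  # attention projections
        task_type="CAUSAL_LM",
    )
    model = get_peft_model(model, lora)  # only the LoRA matrices are trainable
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    return model, tokenizer
```

Calling `model.print_trainable_parameters()` on the returned PEFT model shows how small the trainable fraction is compared with the frozen 4-bit base.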

Apache License 2.0: Mixtral is distributed under the permissive Apache License 2.0, so users can modify, redistribute, and use it for both commercial and non-commercial purposes.

Publicly Available: Mixtral 8x7B and its pretrained weights are available on the Hugging Face Hub for direct use in your projects.

Instruction Fine-Tuning: Mistral AI also offers an instruction-tuned variant, 'mistralai/Mixtral-8x7B-Instruct-v0.1', built for conversational use. Because it is trained to follow instructions, its responses align more closely with user intent.
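The instruct model expects conversations wrapped in `[INST] ... [/INST]` markers. A minimal sketch of that layout, following the template shown on the model card; `build_mixtral_prompt` is a hypothetical helper. With Hugging Face `transformers`, `tokenizer.apply_chat_template` builds the prompt from the tokenizer's own template and is the safer choice, because it inserts the BOS/EOS special tokens correctly instead of as plain text.

```python
from typing import List, Tuple


def build_mixtral_prompt(turns: List[Tuple[str, str]], next_user_msg: str) -> str:
    """Format a conversation in the [INST] ... [/INST] layout from the model card.

    turns: earlier (user, assistant) pairs; next_user_msg: the message to answer.
    "<s>" and "</s>" stand in for the BOS and EOS special tokens.
    """
    prompt = "<s>"
    for user, assistant in turns:
        # Each finished exchange ends with the EOS marker.
        prompt += f" [INST] {user} [/INST] {assistant}</s>"
    # The final instruction is left open for the model to answer.
    prompt += f" [INST] {next_user_msg} [/INST]"
    return prompt


print(build_mixtral_prompt([], "Translate 'good morning' into French."))
# <s> [INST] Translate 'good morning' into French. [/INST]
```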

Use Cases

Content Generation: Use Mixtral to create engaging blog posts, articles, news summaries, social media updates, or even entire books based on specific themes, topics, styles, or guidelines.

Customer Service Chatbots: Implement Mixtral in customer service chatbots to provide quick, accurate, and helpful answers to customers' questions or concerns in multiple languages.

Translation Services: Leverage Mixtral's multilingual abilities to develop translation services that translate text accurately and idiomatically between different languages.

Language Learning Tools: Build interactive learning platforms for users to practice foreign languages by generating personalized dialogues, vocabulary exercises, grammar drills, and quizzes.

Text Summarization & Paraphrasing: Apply Mixtral to condense long documents into shorter summaries or rephrase existing content to make it suitable for different audiences or channels.

Market Research Analysis: Analyze market trends, consumer opinions, competitor activities, and industry reports to extract valuable insights and generate recommendations for businesses.

Creative Writing Assistance: Empower writers by providing suggestions for character development, plot progression, setting descriptions, dialogue enhancement, and stylistic improvements.

Code Review & Documentation: Automate code review processes and documentation generation for programming languages supported by Mixtral, improving software quality and maintainability.

Legal Documents Processing: Extract relevant information, identify clauses, redline changes, and compare versions of legal contracts, agreements, and other documents.

Academic Search & Information Retrieval: Streamline academic research by identifying pertinent literature, extracting essential data points, and synthesizing findings within specific disciplines or interdisciplinary fields.
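For summarization and document-processing use cases, a text longer than the 32k-token context window has to be split before it reaches the model. One common approach (map-reduce summarization, not something this page prescribes) summarizes each chunk and then summarizes the combined summaries. The hypothetical helper below only does the splitting, and it counts whitespace-separated words as a stand-in for tokens; real budgets should be measured with the model's tokenizer, which typically produces more tokens than words.

```python
from typing import List


def chunk_words(text: str, max_words: int, overlap: int = 0) -> List[str]:
    """Split text into windows of at most max_words words.

    Consecutive windows share `overlap` words so that sentences cut at a
    boundary still appear whole in at least one chunk.
    """
    if not 0 <= overlap < max_words:
        raise ValueError("need 0 <= overlap < max_words")
    words = text.split()
    chunks: List[str] = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks


print(chunk_words("a b c d e f g h i j", max_words=4, overlap=1))
# ['a b c d', 'd e f g', 'g h i j']
```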

Pros & Cons

Pros

High accuracy in understanding context

Excellent performance in multilingual tasks

Efficient resource allocation via SMoE

Matches or beats larger models on many benchmarks

Supports extended context lengths

Works well with popular optimization tools

Cons

Limited interpretability of decisions

Occasionally generates incorrect info

May produce biased outputs

Dependent on high-quality input data

Needs powerful hardware for optimal operation

Training requires significant resources

Potential security risks if misused

Not fully immune to hallucinations

Complexity may deter some users

Ongoing maintenance required

Vulnerable to malicious prompts

Sensitive to task ambiguity

Prone to repetition or inconsistent output

Struggles with certain linguistic nuances

Possible ethical implications

Overreliance on technology raises concerns

Quality varies depending on prompt crafting

Risk of perpetuating stereotypes

Insufficient knowledge in niche areas

Latency might increase when dealing with very long inputs


Mixtral 8x7B Alternatives

Gemma

A family of open-source, lightweight AI models.

Claude 3.5 Sonnet

Anthropic's most capable model at its launch

Google Nano Banana

Fast multimodal Gemini model for production

Langfuse

Open Source Observability & Analytics for LLM Apps

Grok 2

xAI's AI assistant, available on X


FLUX.1 [dev]

A 12 billion parameter rectified flow transformer capable of generating images from text descriptions

Llama

Meta's family of openly available large language models


FLUX.1 [schnell]

The fastest image generation model tailored for local development and personal use

Aicado AI

AI Implementation Hub for Non-Technicals

Sora

Creating video from text


FLUX.1 [pro]

State-of-the-art image generation with top-of-the-line prompt following, visual quality, image detail, and output diversity.
