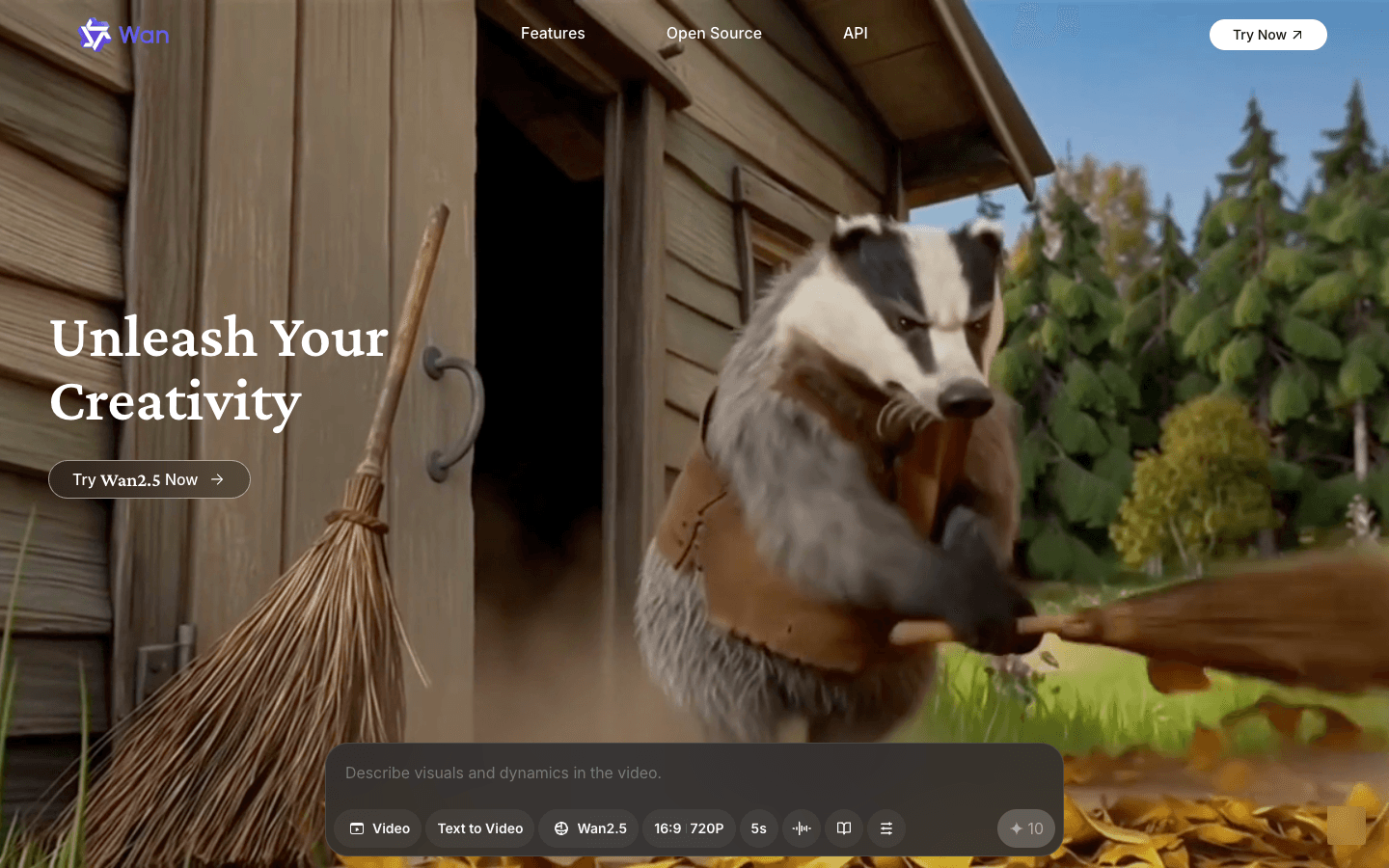

Wan AI

Generate cinematic videos from text, image, and speech

Overview

Wan AI is an AI video generation model family and platform that turns text, images, and audio into cinematic, production-ready video. Its home is https://wan.video, and it pairs open-source research models with a hosted API platform for creators, developers, and enterprises.

The Wan2.x family (including Wan2.2 and Wan2.5) introduces a Mixture-of-Experts (MoE) diffusion architecture and a high-compression Wan2.2-VAE, which together aim to raise visual fidelity while keeping inference costs practical. In practice, users can generate 720P, 24fps clips with rich spatio-temporal dynamics and realistic textures, often on a single consumer GPU such as the NVIDIA RTX 4090.
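To make the idea of a high-compression VAE concrete, the sketch below estimates how much smaller the latent grid is than the pixel grid for a 720P, 24fps clip. The 4x temporal and 16x spatial factors, the 121-frame clip length, and the "first frame encoded alone" convention are illustrative assumptions, not figures stated on this page. The names `Compression` and `latent_shape` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Compression:
    """Assumed VAE downsampling factors (illustrative, not from this page)."""
    temporal: int = 4   # video frames folded into one latent frame
    spatial: int = 16   # pixels per latent cell along height and width


def latent_shape(frames: int, height: int, width: int, c: Compression):
    """Return (latent_frames, latent_height, latent_width).

    Assumes the first frame is encoded on its own and the remaining
    frames are grouped `c.temporal` at a time.
    """
    latent_frames = 1 + (frames - 1) // c.temporal
    return latent_frames, height // c.spatial, width // c.spatial


# Roughly five seconds of 720P video at 24fps (120 frames plus one).
frames, height, width = 121, 720, 1280
shape = latent_shape(frames, height, width, Compression())

pixel_positions = frames * height * width
latent_positions = shape[0] * shape[1] * shape[2]
ratio = pixel_positions / latent_positions

print("latent grid:", shape)
print("positions reduced by about", round(ratio), "x")
```

Under these assumptions the diffusion model works on a grid with about a thousand times fewer positions than the raw video, which is the kind of reduction that makes single-GPU generation plausible.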

Wan AI supports multiple generation modes: text-to-video, image-to-video, speech-to-video, text-to-image, and instruction-based video editing. The platform focuses on synchronized audio-visual outputs, high-fidelity voice and ambient sound integration, and accurate on-screen text rendering. Wan2.2 emphasizes cinematic aesthetics through curated aesthetic training data, and it improves prompt adherence and motion stability over previous releases.

For practitioners and researchers, Wan publishes open-source checkpoints and technical details on its GitHub and research pages linked from https://wan.video, enabling reproducible experiments and custom deployments. On the integration side, Wan AI exposes high-performance, developer-friendly APIs for production use. The API supports base64, file, and URL inputs and is designed for high-speed inference through parallel processing and distributed acceleration.
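As a rough illustration of how a client might handle the three input styles mentioned above (base64, file, and URL), the sketch below normalizes each into one request payload. Every field name here (`kind`, `data`, `url`, `mode`, `image`) and both helper functions are hypothetical. This page does not document the actual request schema, so treat this as a pattern and check the official API reference before use.

```python
import base64
from pathlib import Path
from typing import Union

ImageSource = Union[str, bytes, Path]


def build_image_input(source: ImageSource) -> dict:
    """Normalize raw bytes, a local file, or a URL into one dict shape.

    The dict layout is an assumption made for illustration only.
    """
    if isinstance(source, bytes):
        # Raw bytes are sent inline as base64 text.
        return {"kind": "base64", "data": base64.b64encode(source).decode("ascii")}
    if isinstance(source, Path):
        # A local file is read and encoded; the file name is kept for reference.
        encoded = base64.b64encode(source.read_bytes()).decode("ascii")
        return {"kind": "file", "filename": source.name, "data": encoded}
    if isinstance(source, str) and source.startswith(("http://", "https://")):
        # A remote image is passed by reference.
        return {"kind": "url", "url": source}
    raise ValueError("source must be bytes, a pathlib.Path, or an http(s) URL")


def build_request(prompt: str, source: ImageSource) -> dict:
    """Assemble a hypothetical image-to-video request body."""
    return {
        "mode": "image-to-video",
        "prompt": prompt,
        "image": build_image_input(source),
    }


request = build_request("slow dolly-in at golden hour", "https://example.com/still.png")
print(request)
```

Keeping the normalization in one function means the rest of a client only deals with a single payload shape, whichever input style the caller has on hand.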

Wan AI is well suited for AIGC product teams building generative video features, marketing teams producing short-form clips, game studios prototyping animated sequences, and research labs exploring multimodal reasoning. Whether you need a quick text-driven promo, an audio-driven character animation, or fine-grained instruction-based edits, Wan AI blends open-source innovation with enterprise-grade APIs to accelerate video creation workflows.

Core Features

- Text-to-video generation with cinematic 720P 24fps output

- Image-to-video synthesis preserving subject and style fidelity

- Speech-to-video that drives lifelike facial and body motion

- Instruction-based video and image editing with dialogue control

- Open-source Wan2.x models for research and local deployment

- Mixture-of-Experts architecture for higher quality, efficient inference

- High-compression VAE enabling fast 720P generation on consumer GPUs

Use Cases

- Marketing: generate 10-15 second product promo clips from copy

- Social media: create short cinematic reels from text prompts

- E-learning: produce narrated explainer videos from scripts

- Character animation: animate avatars using voice clips

- Advertising: prototype multiple ad variations quickly

- Game dev: create in-engine cutscene concepts from images

- Film previsualization: storyboard motion with text-directed scenes

- Localization: generate multilingual video variants with synced audio

- AR/VR content: produce short immersive sequences for testing

- Research: benchmark open-source video models and pipelines

Pros & Cons

Pros

- Open-source models for reproducibility

- Multimodal: text, image, and speech inputs supported

- Cinematic 720P output at 24fps

- MoE architecture improves generation quality

- High-compression VAE enables faster inference

- API-first design for easy developer integration

- Runs on a single consumer GPU such as the RTX 4090

- Strong prompt adherence and visual reasoning

- Accurate on-screen text and realistic textures

- Audio-visual synchronization with high-fidelity voices

- Scalable platform suitable for enterprise use

- Comprehensive documentation and user guides

Cons

- Higher resolution requires more compute

- Longer videos increase generation time significantly

- Some stylized prompts need iterative tuning

- Real-time editing capabilities are limited

- Advanced features require developer integration

- Legal and policy constraints apply to outputs

Wan AI Alternatives

RunComfy

RunComfy: Top ComfyUI Platform - Fast & Easy, No Setup

Pippit AI

Free AI video generator for e-commerce and social

Wondershare Virbo

Generate Engaging AI Videos in Minutes!

Dzine AI

Controllable AI image and design studio

Google Veo 3

Fast, realistic text-to-video with native audio

Viggle AI

Remix anyone into viral meme videos

Higgsfield AI

Cinematic AI video generator with pro VFX control

Leonardo AI

AI Image Generator for Art, Video & Design

Animon AI

Create anime videos for free

Dreamina AI

Dreamina AI - Text-to-Image and Video Creator

MindVideo AI

Free text-to-video maker with 4K AI effects
